SciRenovation

- Develop graph neural network architectures specialized for inference path tasks
- Create embedding techniques that preserve logical relationships in vector space



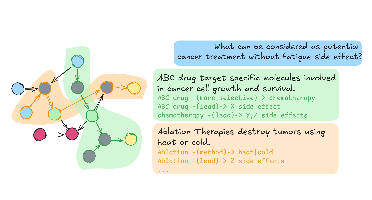

Our team is committed to addressing a critical challenge in graph-based AI systems: their lack of transparency and explainability. We're proposing a comprehensive framework for Natural-Language Explanation Generation (NLEG) in graph systems that traces inference pathways and converts them into human-readable explanations. We're particularly excited about its potential to improve transparency, trustworthiness, and user adoption across domains, with special attention to compatibility with existing graph database systems and knowledge frameworks.

This RFP seeks the development of advanced tools and techniques for interfacing with, refining, and evaluating knowledge graphs that support reasoning in AGI systems. Projects may target any part of the graph lifecycle, from extraction to refinement to benchmarking, and may optionally support symbolic reasoning within the OpenCog Hyperon framework, including compatibility with the MeTTa language and the MORK knowledge graph. Bids are expected to range from $10,000 to $200,000.

We will develop and release a comprehensive inference path identification system capable of tracing reasoning chains in graph-based AI systems. This system will implement optimized graph traversal algorithms specifically designed for knowledge graphs and will include subgraph extraction techniques to handle complex reasoning paths. The system will be thoroughly tested with diverse graph datasets to ensure robustness and accuracy in path identification.
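One traversal strategy of the kind described above could be a depth-bounded search that enumerates every simple path between a premise node and a conclusion node. The sketch below assumes the knowledge graph is a plain list of (subject, relation, object) triples; `build_index`, `inference_paths`, the `max_hops` bound, and the toy graph are all hypothetical and not taken from the proposal.

```python
from collections import defaultdict


def build_index(triples):
    """Index outgoing edges by subject: node -> [(relation, object), ...]."""
    out = defaultdict(list)
    for s, rel, o in triples:
        out[s].append((rel, o))
    return out


def inference_paths(triples, source, target, max_hops=3):
    """Enumerate simple paths (no repeated nodes) from source to target with at
    most max_hops edges, using an explicit-stack depth-first search."""
    out = build_index(triples)
    paths = []
    stack = [(source, [])]  # (current node, edges taken so far)
    while stack:
        node, path = stack.pop()
        if node == target and path:
            paths.append(path)
            continue
        if len(path) == max_hops:
            continue  # depth bound reached without hitting the target
        visited = {source} | {o for _, _, o in path}
        for rel, nxt in out[node]:
            if nxt not in visited:
                stack.append((nxt, path + [(node, rel, nxt)]))
    return paths


# Hypothetical toy knowledge graph, for illustration only.
kg = [
    ("aspirin", "inhibits", "COX1"),
    ("COX1", "produces", "thromboxane"),
    ("thromboxane", "promotes", "clotting"),
    ("aspirin", "treats", "pain"),
]

for p in inference_paths(kg, "aspirin", "clotting"):
    print(" -> ".join(f"{s} [{r}] {o}" for s, r, o in p))
```

Exhaustive enumeration grows exponentially with depth, so at the 100,000-node scale named in the success criteria a real system would likely combine a search like this with pruning, bidirectional search, or prior subgraph extraction.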

- A documented codebase implementing at least three specialized graph traversal algorithms optimized for inference path identification
- A pattern matching subsystem for identifying structural configurations in knowledge graphs
- A performance evaluation report comparing our system against current path identification methods using standard benchmark datasets
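The pattern matching subsystem could be approached as conjunctive triple-pattern queries, similar in spirit to SPARQL basic graph patterns or MeTTa's `match`. The minimal backtracking sketch below assumes terms starting with `?` are variables (MeTTa itself writes variables as `$x`); the function names and the toy graph are illustrative only.

```python
def is_var(term):
    """Hypothetical convention: a term beginning with '?' is a variable."""
    return term.startswith("?")


def match_triple(pattern, triple, bindings):
    """Try to unify one pattern with one triple, extending existing bindings.
    Returns the new bindings, or None if they conflict."""
    new = dict(bindings)
    for p, t in zip(pattern, triple):
        if is_var(p):
            if p in new and new[p] != t:
                return None
            new[p] = t
        elif p != t:
            return None
    return new


def match_pattern(patterns, triples, bindings=None):
    """Return every variable binding that satisfies all patterns at once."""
    bindings = {} if bindings is None else bindings
    if not patterns:
        return [bindings]
    first, rest = patterns[0], patterns[1:]
    results = []
    for triple in triples:
        b = match_triple(first, triple, bindings)
        if b is not None:
            results.extend(match_pattern(rest, triples, b))
    return results


# Hypothetical toy knowledge graph.
kg = [
    ("aspirin", "inhibits", "COX1"),
    ("COX1", "produces", "thromboxane"),
    ("thromboxane", "promotes", "clotting"),
    ("aspirin", "treats", "pain"),
]

# "Which drug inhibits an enzyme that produces something?"
print(match_pattern([("?d", "inhibits", "?e"), ("?e", "produces", "?x")], kg))
```

This naive join rescans every triple per pattern; an indexed store would be needed for graphs of realistic size.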

$25,000 USD

The system will successfully identify at least 90% of inference paths in test scenarios. The system will be able to process graphs with at least 100,000 nodes within reasonable time constraints.
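The 90% criterion implies a recall measurement against a gold-standard set of inference paths. The proposal does not say how a found path is matched to a gold path; this sketch assumes exact matching of edge sequences, and the names `path_recall` and `meets_target` are hypothetical.

```python
def path_recall(found, expected):
    """Fraction of gold-standard paths that appear, as exact edge sequences,
    among the paths the system found."""
    if not expected:
        return 1.0  # nothing to find: treat as perfect recall by convention
    found_set = {tuple(p) for p in found}
    hits = sum(1 for p in expected if tuple(p) in found_set)
    return hits / len(expected)


def meets_target(found, expected, threshold=0.90):
    """True if recall reaches the milestone threshold (90% by default)."""
    return path_recall(found, expected) >= threshold


# Hypothetical example: 10 gold paths; the system recovers 9 plus one spurious path.
gold = [[("a", "r", f"n{i}")] for i in range(10)]
found = gold[:9] + [[("x", "r", "y")]]
print(path_recall(found, gold), meets_target(found, gold))
```

Spurious paths do not lower recall, so a full evaluation would presumably report precision alongside it.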

We will build a sophisticated explanation generation system that transforms identified inference paths into clear natural language explanations. This system will combine template-based approaches with neural methods to generate explanations that are both accurate and natural-sounding. The explanations will be adaptable to different user expertise levels and will include appropriate domain terminology.

- A modular explanation generation system supporting multiple verbalization strategies
- A library of domain-specific templates for at least three domains (e.g., biomedical, financial, educational)
- An interactive demonstration tool allowing users to explore different explanation styles for the same inference path
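The template-based half of the explanation generator could key templates by domain, relation, and user expertise level, falling back to a generic pattern when no template exists. This sketch covers only that template layer (the neural component is not shown); the template strings, `verbalize_edge`, and `verbalize_path` are hypothetical.

```python
# Hypothetical templates keyed by (domain, relation, expertise level).
# Missing keys fall back to a generic "subject relation object" pattern.
TEMPLATES = {
    ("biomedical", "inhibits", "expert"): "{s} inhibits {o}",
    ("biomedical", "inhibits", "novice"): "{s} blocks {o}",
    ("biomedical", "produces", "novice"): "{s} makes {o}",
}
FALLBACK = "{s} {rel} {o}"


def verbalize_edge(edge, domain, level):
    """Render one (subject, relation, object) edge as a clause."""
    s, rel, o = edge
    template = TEMPLATES.get((domain, rel, level), FALLBACK)
    return template.format(s=s, rel=rel.replace("_", " "), o=o)


def verbalize_path(path, domain="biomedical", level="novice"):
    """Join per-edge clauses into one sentence, keeping the path's step order."""
    clauses = [verbalize_edge(e, domain, level) for e in path]
    if not clauses:
        return ""
    if len(clauses) == 1:
        text = clauses[0]
    else:
        text = ", ".join(clauses[:-1]) + ", and " + clauses[-1]
    # Uppercase only the first character so names like "COX1" keep their case.
    return text[0].upper() + text[1:] + "."


path = [
    ("aspirin", "inhibits", "COX1"),
    ("COX1", "produces", "thromboxane"),
    ("thromboxane", "promotes", "clotting"),
]
print(verbalize_path(path, level="novice"))
print(verbalize_path(path, level="expert"))
```

Keeping the lookup key explicit makes it straightforward to add a domain or expertise level without touching the rendering logic.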

$30,000 USD

The system will generate explanations that achieve human ratings of at least 4/5 for clarity and accuracy in blind evaluation studies. Generated explanations will successfully incorporate domain-specific terminology and adapt to different user expertise levels as measured through comprehension tests.

We will develop a multi-dimensional evaluation framework for assessing explanation quality that goes beyond traditional metrics. This framework will include both automated metrics and user-centered evaluation methodologies to provide a holistic assessment of explanation effectiveness. The framework will be designed to be reusable across different explanation generation systems.

- A suite of automated metrics measuring explanation fidelity, linguistic quality, and information completeness
- A standardized protocol for user-centered evaluation covering comprehension, trust, and task performance
- A benchmark dataset of explanations with human annotations for training and evaluation purposes
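As a rough illustration of automated fidelity and completeness metrics, the sketch below compares the entities an explanation mentions with the entities on the inference path. It assumes case-insensitive substring matching against a known entity vocabulary, which is a crude stand-in for proper entity linking; the metric definitions and names here are hypothetical, not the project's specified metrics.

```python
def path_nodes(path):
    """All entities that appear on an inference path."""
    return {s for s, _, _ in path} | {o for _, _, o in path}


def mentioned(entities, text):
    """Entities whose names occur in the text (case-insensitive substring match)."""
    lowered = text.lower()
    return {e for e in entities if e.lower() in lowered}


def completeness(path, explanation):
    """Share of path entities that the explanation mentions."""
    nodes = path_nodes(path)
    if not nodes:
        return 1.0
    return len(mentioned(nodes, explanation)) / len(nodes)


def fidelity(path, explanation, vocabulary):
    """Share of known entities mentioned in the explanation that lie on the path.
    Mentions of off-path entities lower the score (a crude hallucination check)."""
    cited = mentioned(vocabulary, explanation)
    if not cited:
        return 1.0
    return len(cited & path_nodes(path)) / len(cited)


# Hypothetical path, entity vocabulary, and candidate explanations.
path = [
    ("aspirin", "inhibits", "COX1"),
    ("COX1", "produces", "thromboxane"),
    ("thromboxane", "promotes", "clotting"),
]
vocab = {"aspirin", "COX1", "thromboxane", "clotting", "pain"}

print(completeness(path, "Aspirin blocks COX1, which makes thromboxane."))
print(fidelity(path, "Aspirin blocks COX1 and relieves pain.", vocab))
```

Linguistic quality is not covered here; it would need separate measures.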

$25,000 USD

The evaluation framework will demonstrate high inter-rater reliability and will identify meaningful differences between explanation generation techniques that correlate with user preferences.
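The proposal does not name an inter-rater reliability statistic. One standard choice for two annotators assigning categorical labels is Cohen's kappa, which corrects raw agreement for agreement expected by chance. A self-contained sketch, with hypothetical example ratings:

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same items with categories."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical clarity ratings (1-5) from two annotators on four explanations.
a = [5, 4, 4, 3]
b = [5, 4, 3, 3]
print(cohen_kappa(a, b))
```

Unweighted kappa treats a 4-versus-5 disagreement the same as 1-versus-5; for ordinal rating scales a weighted kappa or Krippendorff's alpha may be more appropriate, and Fleiss' kappa extends the idea to more than two raters.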

We will develop robust integration between our explanation tools and the MeTTa/MORK systems in the OpenCog ecosystem. This integration will enable transparent reasoning explanations within MeTTa's computational framework and MORK's knowledge representation system.

- A dedicated integration module connecting our explanation system to MeTTa/MORK data structures and workflows
- A set of MeTTa-specific explanation templates optimized for MORK's knowledge representation
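At its simplest, an integration module needs to move inference paths between the triple form used by the explanation tools and MeTTa's parenthesized expression syntax. The sketch below works purely at the string level and assumes a `(relation subject object)` atom layout, an underscore convention for multi-word names, and a hypothetical `InferencePath` head symbol; it does not use the `hyperon` Python bindings or any actual MORK interface, which a real module would likely go through.

```python
def symbol(name):
    """Hypothetical convention: join whitespace-separated words with underscores
    so each name becomes a single symbol token."""
    return "_".join(name.split())


def to_metta_atom(edge):
    """Render a (subject, relation, object) edge as '(relation subject object)'."""
    s, rel, o = edge
    return f"({symbol(rel)} {symbol(s)} {symbol(o)})"


def path_to_metta(path, path_id):
    """Wrap a whole path as one expression: (InferencePath id step1 step2 ...)."""
    steps = " ".join(to_metta_atom(e) for e in path)
    return f"(InferencePath {path_id} {steps})"


def parse_atom(text):
    """Parse a flat three-element expression like '(rel s o)' back into (s, rel, o)."""
    text = text.strip()
    if not (text.startswith("(") and text.endswith(")")):
        raise ValueError(f"not a parenthesized expression: {text!r}")
    tokens = text[1:-1].split()
    if len(tokens) != 3:
        raise ValueError(f"expected 3 elements, got {len(tokens)}")
    rel, s, o = tokens
    return (s, rel, o)


path = [("aspirin", "inhibits", "COX1"), ("COX1", "produces", "thromboxane")]
print(path_to_metta(path, "p1"))
print(parse_atom("(inhibits aspirin COX1)"))
```

`parse_atom` only handles flat atoms; nested expressions would need a real s-expression parser.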

$7,500 USD

The integrated system will successfully generate explanations for at least 75% of inference paths in MeTTa/MORK without requiring modifications to existing MeTTa/MORK implementations.


© 2025 Deep Funding
