Patrick Nercessian

Project Owner: Lead the delegation of tasks and oversight of progress on each milestone. Utilize prior experience with advanced generative AI techniques to guide and inform on structural decisions of the evolution.


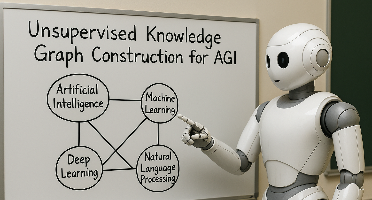

The goal of this project is to create a framework that can construct and expand knowledge graphs on the Hyperon platform and, secondly, utilize these knowledge graphs during large language model (LLM) inference to improve factual grounding during responses. We plan to construct a knowledge graph from an initial knowledge base, such as Wikipedia, and expand upon it via an iterative intelligent search into more detailed source materials, such as textbooks and research papers. The expanded graph will serve as a source of reliable truth to reduce the rate of LLM hallucinations during AGI reasoning tasks requiring multi-hop retrieval and other forms of semantically complex question answering.
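As a rough illustration of the multi-hop retrieval idea described above, the sketch below stores a few toy triples, finds a chain of facts linking two entities with a breadth-first search, and renders that chain as text that could be prepended to an LLM prompt. It is only a minimal sketch under stated assumptions: the triples, the function names (`build_index`, `multi_hop`, `format_context`), and the `max_hops` parameter are all hypothetical, and it does not use MeTTa, MORK, or any Hyperon API.

```python
from collections import defaultdict, deque

# Hypothetical toy triples (subject, relation, object). A real graph would be
# constructed from a knowledge base such as Wikipedia, not hard-coded.
TRIPLES = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Warsaw", "capital_of", "Poland"),
    ("Marie Curie", "field", "Physics"),
]


def build_index(triples):
    """Map each subject to its outgoing (relation, object) edges."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[subject].append((relation, obj))
    return index


def multi_hop(index, start, goal, max_hops=3):
    """Breadth-first search from start to goal along outgoing edges.

    Returns the shortest chain of triples, or None if the goal is not
    reachable within max_hops edges.
    """
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue
        for relation, obj in index.get(node, []):
            if obj not in visited:
                visited.add(obj)
                queue.append((obj, path + [(node, relation, obj)]))
    return None


def format_context(path):
    """Render a chain of triples as plain sentences for use in a prompt."""
    return "\n".join(
        f"{s} {r.replace('_', ' ')} {o}." for s, r, o in path
    )


INDEX = build_index(TRIPLES)
PATH = multi_hop(INDEX, "Marie Curie", "Poland")

if __name__ == "__main__":
    print(format_context(PATH))
```

A question such as "In which country was Marie Curie born?" needs two hops here (person to city, city to country). Returning the explicit chain of triples, rather than only the final entity, is one way the retrieved facts could be shown to the model as grounding.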

This RFP seeks the development of advanced tools and techniques for interfacing with, refining, and evaluating knowledge graphs that support reasoning in AGI systems. Projects may target any part of the graph lifecycle — from extraction to refinement to benchmarking — and should optionally support symbolic reasoning within the OpenCog Hyperon framework, including compatibility with the MeTTa language and MORK knowledge graph. Bids are expected to range from $10,000 - $200,000.

Submit a thorough research plan outlining and detailing the approach and work to be done.

Detailed research plan with review of current literature; agile breakdown of tasks with proposed timelines; scoping document with functional/nonfunctional requirements and framework design; report of selected benchmarks/evaluative sets.

$30,000 USD

Completion of relevant documentation for scoping and project planning. Literature review prepares for deep understanding and manipulation of RFP-specific tooling and concepts, such as the MORK repository and current SOTA techniques for agentic knowledge graph interfacing. The literature review should also cover selection of applicable benchmark/test sets for evaluating reasoning capacity of the new framework from multiple dimensions.

Complete initial development of the knowledge graph framework, demonstrating the ability to construct domain-specific knowledge graphs from a structured source and the ability of the LLM agent to traverse the graph to find necessary information. Where possible, we will integrate with MeTTa, MORK, and other Hyperon frameworks. Run initial benchmarks evaluating how our system improves on vanilla LLM systems.

Initial implementation of graph creation framework; initial implementation of Knowledge Graph Inference Agent; initial testing results and analysis against standard benchmarks.

$40,000 USD

Ability to generate knowledge graphs from a source concept node using an LLM agent; ability for an LLM agent to walk an existing knowledge graph; benchmarking and testing runs complete without errors.

Further development of the knowledge graph framework to include the ability to extend the knowledge graph with new information from unstructured sources. Where possible, we will integrate with MeTTa, MORK, and other Hyperon frameworks. Run the same benchmarks to evaluate how the system improves on Milestone 2.

Further implementation of graph creation framework; related testing results and analysis against standard benchmarks.

$60,000 USD

Ability to extend knowledge graphs from Milestone 2 with new knowledge extracted from unstructured data sources using LLM agents, benchmarking, and testing

Run additional experiments, such as how fine-tuning, reinforcement learning, and multi-agent consensus improve capabilities. Evaluate on multiple benchmarks and perform ablations to determine the distinct improvement contributed by each subsystem.

Expanded implementation; new benchmark results; report including rationale for extended experiment prioritization or deferral based on the previously conducted literature review.

$40,000 USD

Presentation of results of Milestone 2 experiments with augmented experimental groups

Submit all final materials as committed to in the grant proposal.

Final report with performance analysis; code; framework demonstration; documentation.

$30,000 USD

Report is able to communicate methods to a degree of detail wherein someone with proper qualifications and resources could repeat all experiments. Explanations and presentation of results are thorough such that anyone familiar with relevant AI topics would be able to comprehend them.


© 2025 Deep Funding
