Andres Alvarez

Project Owner: Principal Investigator and System Architect, leading the design, integration, and strategic direction of the neuro-symbolic AGI kernel, with expertise in AI, finance, and legal-tech systems.

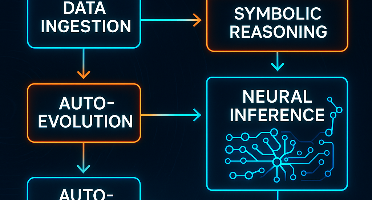

A self-optimizing neuro-symbolic deep learning framework that fuses symbolic reasoning with neural inference under a fully autonomous paradigm. It ingests and cleans diverse data streams, applies hybrid planning and inference, and continuously self-tunes its network topologies and hyperparameters. An integrated code-generation engine autonomously produces new model components and test suites to sustain ongoing improvement. Industrial-grade robustness is validated by enforcing end-to-end latency targets (<200 ms), 100% data-integrity checks, and >90% test coverage. The six-month schedule covers integration, scaling on GPU clusters, and fault-injection drills, and delivers a production-ready system.

This RFP invites proposals to explore and demonstrate the use of neural-symbolic deep neural networks (DNNs), such as PyNeuraLogic and Kolmogorov–Arnold Networks (KANs), for experiential learning and/or higher-order reasoning. The goal is to investigate how these architectures can embed logic rules derived from experiential systems like AIRIS, or user-supplied higher-order logic, and apply them to improve reasoning in graph neural networks (GNNs), LLMs, or other DNNs. Bids are expected to range from $40,000 to $100,000.

This milestone will focus on designing and integrating the foundational components of the neuro-symbolic system: logic-based inference, high-performance neural processing, and adaptive control mechanisms. It includes the full specification of the symbolic reasoning engine (supporting predicate logic, graph-based traversal, and causal operators) and its interface with GPU-accelerated DNN modules for real-time data inference. Additionally, we will deploy the orchestration layer that allows for self-tuning and internal architecture evolution. This layer monitors system metrics and adjusts hyperparameters, batch sizes, or even model structure dynamically to maintain high accuracy and throughput. A modular plug-in interface will also be built to facilitate future extensions, such as meta-learning units or quantum hybrids. The milestone serves as the architectural backbone for the full AGI prototype. The outputs of this phase will enable logical reasoning and adaptive learning within the same system for the first time in our stack. It sets the stage for subsequent auto-generation, benchmarking, and self-healing capabilities.
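The modular plug-in interface described above could take many shapes. A minimal sketch, assuming a name-keyed registry that pushes metrics snapshots to each plugin, is shown below; `KernelPlugin`, `PluginRegistry`, `on_metrics`, and `MetricsRecorder` are hypothetical names for illustration, not part of any existing codebase.

```python
from typing import Dict, List, Protocol


class KernelPlugin(Protocol):
    """Hypothetical contract that every extension module is assumed to satisfy."""

    name: str

    def on_metrics(self, metrics: Dict[str, float]) -> None:
        ...


class PluginRegistry:
    """Holds plugins by name so core code never imports extension modules directly."""

    def __init__(self) -> None:
        self._plugins: Dict[str, KernelPlugin] = {}

    def register(self, plugin: KernelPlugin) -> None:
        if plugin.name in self._plugins:
            raise ValueError(f"plugin {plugin.name!r} is already registered")
        self._plugins[plugin.name] = plugin

    def unregister(self, name: str) -> None:
        # Removing an unknown name is a no-op, so hot-swapping stays simple.
        self._plugins.pop(name, None)

    def broadcast(self, metrics: Dict[str, float]) -> None:
        # Every registered plugin receives its own copy of the metrics snapshot.
        for plugin in self._plugins.values():
            plugin.on_metrics(dict(metrics))

    def names(self) -> List[str]:
        return sorted(self._plugins)


class MetricsRecorder:
    """Toy plugin that simply records every snapshot it is sent."""

    name = "recorder"

    def __init__(self) -> None:
        self.history: List[Dict[str, float]] = []

    def on_metrics(self, metrics: Dict[str, float]) -> None:
        self.history.append(metrics)
```

Because the core only talks to the `Protocol`, a meta-learning unit or quantum-hybrid adapter could be added later by implementing `on_metrics`, without touching the registry.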

- Symbolic Reasoning Engine v1.0: Core module capable of executing basic logical operations (AND/OR/XOR), conditional rules, and predicate logic queries over structured semantic inputs. Integrates a custom lightweight logic parser for traceability and transparent execution logs.
- Neural Interface Bridge: Bidirectional data bridge between symbolic modules and the neural network layers (transformers, CNNs, or custom DNNs). Includes a tensor-to-symbol pipeline and symbol-influenced neural gating logic.
- Orchestration Layer v0.9: Monitors performance metrics (latency, accuracy, GPU load) and adjusts model architecture parameters on the fly. Incorporates plugin hooks for future modules (LLM, Meta-Controllers, Quantum Hybrids).
- Technical Blueprint Documentation:
  - Full architectural diagram
  - Component-level interaction protocols (API + data schemas)
  - Logical graph composition specification for modular expansion

All deliverables will be version-controlled, open to audit, and prepared for smooth handover to Phase 2 (Auto-Generative Layer & Benchmarks).
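To make the reasoning engine and gating deliverables concrete, here is a minimal sketch, assuming a Datalog-style forward-chaining design over ground facts: conjunction (AND) comes from multi-atom rule bodies, disjunction (OR) from several rules sharing a head, and XOR/negation are left out. `SymbolicEngine`, `Rule`, and `gate_subnetworks` are hypothetical names, and the `trace` list stands in for the transparent execution log.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional, Set, Tuple

# An atom is (predicate, arg1, arg2, ...); arguments starting with "?" are variables.
Atom = Tuple[str, ...]
Binding = Dict[str, str]


@dataclass(frozen=True)
class Rule:
    """Conditional rule: `head` holds whenever every atom in `body` holds (AND)."""

    head: Atom
    body: Tuple[Atom, ...]


def unify(pattern: Atom, fact: Atom, binding: Binding) -> Optional[Binding]:
    """Match one pattern against one ground fact; return the extended binding or None."""
    if len(pattern) != len(fact) or pattern[0] != fact[0]:
        return None
    extended = dict(binding)
    for p, f in zip(pattern[1:], fact[1:]):
        if p.startswith("?"):
            if extended.setdefault(p, f) != f:
                return None
        elif p != f:
            return None
    return extended


@dataclass
class SymbolicEngine:
    facts: Set[Atom] = field(default_factory=set)
    rules: List[Rule] = field(default_factory=list)
    trace: List[str] = field(default_factory=list)  # human-readable execution log

    def _match_all(self, body: Tuple[Atom, ...], binding: Binding) -> Iterator[Binding]:
        # Depth-first conjunctive match of the remaining body atoms.
        if not body:
            yield binding
            return
        for fact in self.facts:
            extended = unify(body[0], fact, binding)
            if extended is not None:
                yield from self._match_all(body[1:], extended)

    def run(self) -> None:
        """Forward-chain until no rule derives a new fact (a fixpoint)."""
        changed = True
        while changed:
            changed = False
            for rule in self.rules:
                # Materialize matches first so the fact set is not modified mid-iteration.
                for binding in list(self._match_all(rule.body, {})):
                    derived = tuple(binding.get(a, a) for a in rule.head)
                    if derived not in self.facts:
                        self.facts.add(derived)
                        self.trace.append(f"{derived} via {rule.head} with {binding}")
                        changed = True

    def query(self, pattern: Atom) -> List[Binding]:
        """Return every binding under which `pattern` matches a known fact."""
        results = []
        for fact in sorted(self.facts):
            binding = unify(pattern, fact, {})
            if binding is not None:
                results.append(binding)
        return results


def gate_subnetworks(engine: SymbolicEngine, guards: Dict[str, Atom]) -> Dict[str, bool]:
    """Symbol-influenced gating: a subnetwork is enabled only if its guard fact was derived."""
    return {name: guard in engine.facts for name, guard in guards.items()}
```

For example, with facts `("parent", "ann", "bob")` and `("parent", "bob", "cid")` plus two `ancestor` rules (base case and recursive case), `run()` derives three `ancestor` facts and logs each derivation, and a subnetwork guarded by `("ancestor", "ann", "cid")` is switched on.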

$22,000 USD

- Functional Integration: The symbolic core is able to reason over structured input and influence the behavior of the neural inference system (e.g., by activating/deactivating subnetworks or changing routing paths based on logical conditions). Logical outputs can be traced and visualized alongside neural predictions.
- Runtime Adaptation Engine: The orchestration layer reacts to system metrics by adjusting hyperparameters such as learning rates, batch sizes, and inference precision (e.g., float32 to float16) to maintain optimal performance. Success is demonstrated by adapting to three predefined data-drift scenarios with a performance drop of less than 5%.
- Module Modularity: Each component must be independently callable via a defined API, making it testable and replaceable. This supports future integration of quantum inference or meta-learning modules without re-engineering core logic.
- Documentation Completeness: Blueprint documentation must be clear enough for third-party developers to understand the architecture, data flow, and extension points. Includes test coverage >85% and logs for 3 performance scenarios (standard, degraded, auto-recovered).

By achieving these results, we ensure the system moves from concept to operational foundation with a resilient, evolvable, AGI-ready structure.
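The runtime adaptation criterion can be illustrated with a small rule-based controller. This is a minimal sketch under stated assumptions: the 200 ms budget comes from the proposal, while the GPU-load ceiling, the batch limits, the ordering of levers (precision first, then batch size), and the `val_loss_delta` metric key are all hypothetical choices, and `RuntimeConfig`, `AdaptationPolicy`, and `adapt` are illustrative names.

```python
from dataclasses import dataclass, replace
from typing import Dict


@dataclass(frozen=True)
class RuntimeConfig:
    batch_size: int = 64
    precision: str = "float32"      # "float32" or "float16"
    learning_rate: float = 1e-3


@dataclass(frozen=True)
class AdaptationPolicy:
    latency_budget_ms: float = 200.0   # latency target quoted in the proposal
    gpu_load_ceiling: float = 0.90     # assumed threshold, not from the proposal
    min_batch: int = 8
    max_batch: int = 256


def adapt(cfg: RuntimeConfig, metrics: Dict[str, float], policy: AdaptationPolicy) -> RuntimeConfig:
    """Return a new config nudged toward the targets; `cfg` itself is never mutated."""
    batch, precision, lr = cfg.batch_size, cfg.precision, cfg.learning_rate
    pressured = (metrics["latency_ms"] > policy.latency_budget_ms
                 or metrics["gpu_load"] > policy.gpu_load_ceiling)
    if pressured:
        if precision == "float32":
            precision = "float16"                      # first lever: half the bytes per value
        else:
            batch = max(policy.min_batch, batch // 2)  # second lever: smaller batches
    elif metrics["latency_ms"] < 0.5 * policy.latency_budget_ms:
        batch = min(policy.max_batch, batch * 2)       # ample headroom: raise throughput
    if metrics.get("val_loss_delta", 0.0) > 0:
        lr *= 0.5                                      # back off when validation loss rises
    return replace(cfg, batch_size=batch, precision=precision, learning_rate=lr)
```

Returning a fresh frozen config on each step keeps every adaptation decision inspectable and makes it trivial to log or revert, which fits the traceability goal above.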

Milestone 2 focuses on implementing autonomous generation of system components, dynamic benchmarking, and robustness mechanisms. Building on the foundational logic–neural architecture established in Milestone 1, this phase introduces a self-generative layer that uses a lightweight large language model (LLM) to produce functional code, test cases, and network modifications based on runtime needs or evolution protocols. We will also deploy a complete benchmarking suite that continuously evaluates inference latency, symbolic-neural synchronization, resource usage (CPU/GPU/VRAM), and accuracy across multiple test environments. This infrastructure will be critical to validate self-evolving behaviors and ensure SLA compliance in real-time settings. Furthermore, we will implement the fault detection and recovery subsystem. This includes automated rollback on model degradation, fallback strategies for logic-structure failures, and drift monitoring to preemptively restructure models. The combination of auto-generation, metrics-driven validation, and self-healing makes the system not only adaptive but self-reinforcing: able to improve and correct itself across time, usage, and contexts, in alignment with the AAA (Auto-evolutive, Auto-adaptive, Auto-generative) paradigm.

- Auto-Generative Framework v1.0: Transformer-powered LLM engine capable of generating:
  - Code snippets for evolving logic/neural layers
  - Unit and integration test cases for new components
  - Interface wrappers for symbolic-neural API expansions

  Engine includes a feedback loop from system diagnostics to generation prompt tuning.
- Benchmarking & Evaluation Suite: Modular benchmarking stack with integrated test harness for:
  - Latency (<200 ms), throughput, and precision
  - Symbolic vs. neural synchronization fidelity
  - Auto-evolution effectiveness over N iterations

  Includes visual dashboards and metric storage.
- Resilience Engine v1.0:
  - Rollback logic that detects performance regressions and reverts to prior stable models
  - Fallback execution graph if the symbolic layer fails or becomes incoherent
  - Drift detection module for model retraining or evolution triggers
- System-wide Documentation Pack:
  - Benchmarking methodology, performance logs, thresholds
  - Diagrams for fault recovery flow and trigger architecture
  - Generation flow and language model integration map
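The proposal does not specify how the drift detection module measures drift. One common option is the Population Stability Index (PSI), sketched below under that assumption: it compares the binned distribution of a live feature window against a reference sample, and the 0.25 trigger is a widely used rule of thumb rather than a value from this proposal. `psi` and `DriftMonitor` are hypothetical names.

```python
import bisect
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of `values` per bin; out-of-range values are clamped to the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    eps = 1e-6  # keeps log() finite for empty bins
    return [max(c / len(values), eps) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float], n_bins: int = 10) -> float:
    """Population Stability Index over equal-width bins spanning the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0   # degenerate reference: fall back to unit-width bins
    edges = [lo + k * width for k in range(n_bins + 1)]
    expected = _bin_fractions(reference, edges)
    actual = _bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


class DriftMonitor:
    """Flags a live window whose PSI against the reference exceeds `threshold`."""

    def __init__(self, reference: Sequence[float], threshold: float = 0.25, n_bins: int = 10) -> None:
        self.reference = list(reference)
        self.threshold = threshold  # 0.25 is a common rule of thumb for a significant shift
        self.n_bins = n_bins

    def check(self, window: Sequence[float]) -> bool:
        """True when the window has drifted enough to trigger retraining or evolution."""
        return psi(self.reference, window, self.n_bins) > self.threshold
```

Each PSI term `(a - e) * log(a / e)` is non-negative, so the index is zero for identical distributions and grows as probability mass moves between bins; a per-feature monitor like this could feed the retraining/evolution triggers listed above.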

$27,998 USD

- Fully Functional Auto-Generative Subsystem: The LLM component generates syntactically and functionally valid code for >90% of prompts. Generated tests integrate with, and pass for, newly evolved neural-symbolic modules.
- Benchmarking Framework Validation: Benchmarks are executed automatically after each model change, evolution step, or environmental change. A real-time dashboard reflects latency, accuracy, resource usage, and auto-adaptation effectiveness.
- Robust Recovery & Self-Healing: The system detects critical performance degradation (a drop of more than 10%) and autonomously rolls back to the last best checkpoint. Symbolic and neural inference fallbacks engage under defined failure conditions with a switch time of ≤5 s. Model drift across 3 test datasets is detected and triggers internal retraining/evolution within one cycle.
- Comprehensive Documentation: All auto-generated components are stored, versioned, and mapped to their generation triggers. Benchmarking and fault-recovery protocols are replicable by third-party developers. Includes complete performance profiles across at least 3 use-case scenarios.

By completing this milestone, the system will evolve from a static hybrid framework into a self-generating, self-validating, and self-healing AGI-ready infrastructure, with clear pathways to industrial application and open research extension.
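The rollback criterion can be expressed as a simple checkpoint tracker. This is a minimal sketch, assuming the ">10% threshold drop" means a relative drop against the best committed score and that higher scores are better; `Checkpoint` and `RollbackManager` are hypothetical names, and model state is kept as an opaque value rather than tied to any particular framework.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass(frozen=True)
class Checkpoint:
    version: int
    score: float   # higher is better, e.g. validation accuracy
    state: Any     # opaque model state; a real system would store an artifact reference


@dataclass
class RollbackManager:
    max_relative_drop: float = 0.10   # the ">10% threshold drop", read as a relative drop
    history: List[Checkpoint] = field(default_factory=list)
    best: Optional[Checkpoint] = None

    def commit(self, ckpt: Checkpoint) -> None:
        """Record a validated checkpoint and track the best one seen so far."""
        self.history.append(ckpt)
        if self.best is None or ckpt.score > self.best.score:
            self.best = ckpt

    def evaluate(self, live_score: float) -> Optional[Checkpoint]:
        """Return the checkpoint to restore if live performance fell too far, else None."""
        if self.best is None or self.best.score <= 0:
            return None
        drop = (self.best.score - live_score) / self.best.score
        return self.best if drop > self.max_relative_drop else None
```

With a best checkpoint scoring 0.90, a live score of 0.85 (about a 5.6% relative drop) is tolerated, while 0.70 (about 22%) returns that checkpoint for restoration.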

The final milestone focuses on deploying the complete neuro-symbolic AGI system in a reproducible and scalable format. This includes packaging the core into a containerized or modular deployment (e.g., Docker + Kubernetes), enabling external users to replicate, test, and extend the system. In this phase, we will conduct formal evaluations of system performance across multiple use cases, including symbolic reasoning tasks, dynamic adaptation to concept drift, and real-time inference under load. Results will be compiled into a performance report and peer-reviewed technical whitepaper. We will also activate the external interfaces: public documentation portal, example applications (demos), and usage guides. The final goal is to empower researchers, developers, and institutions to build upon this foundation by providing transparency, modularity, and a clear roadmap for future contributions. This milestone also marks the official submission of open-source components (as applicable), secure versioning of core elements, and readiness for integration with SingularityNET or similar AGI infrastructures.

- Deployable Neuro-Symbolic Kernel v1.0:
  - Packaged architecture via container (Docker/K8s compatible) with CLI and/or API control
  - Includes config templates, usage presets, and ready-to-run examples
  - All subsystems (reasoning, evolution, orchestration, recovery) integrated and callable
- Formal Performance Report:
  - Evaluation across benchmark tasks: symbolic inference (e.g., logic chains, graph deductions), neural tasks (e.g., text/sequence classification), and adaptation under concept drift and system load
  - Measured against industry-grade benchmarks with latency, accuracy, and recovery metrics
- Technical Documentation & Whitepaper:
  - Complete technical reference (≈50 pages), including data schemas, component blueprints, and fault logic trees
  - Whitepaper summarizing theoretical grounding, architecture rationale, and experimental findings
- Public Demo Portal & Code Repository:
  - GitHub repository (MIT or custom license) with versioned modules
  - 2–3 minimal example applications (e.g., logic-enhanced chatbot, real-time inference pipeline)
  - Hosted documentation site or developer portal with onboarding flow

$30,002 USD

- Deployment Completeness:
  - Kernel is containerized and deployable on a GPU-enabled system with minimal configuration
  - Includes orchestration layer with monitoring and fault tolerance enabled
  - System successfully initializes, evolves, and executes reasoning + inference cycles in multiple environments
- Benchmark Validation:
  - Passes ≥90% of designed functional and stress tests
  - Meets key performance targets:
    - Inference latency <200 ms (neural + symbolic)
    - Recovery time ≤5 s after fault injection
    - Adaptation to drift with <5% accuracy drop and successful architecture evolution within 3 cycles
- Transparency & Documentation:
  - Whitepaper published with full internal structure explained
  - All public modules documented and reproducible
  - Installation, use, and extension instructions tested with external users (e.g., beta contributors or advisors)
- Community Integration:
  - GitHub repository open, licensed, and ready for forks/pull requests
  - Public demo apps running and reproducible
  - Developer onboarding materials available, including diagrams, CLI/API commands, and example workflows

This milestone will demonstrate that the neuro-symbolic AGI kernel is not only functional and self-adaptive, but also ready for external adoption, experimentation, and collaborative advancement.
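The numeric benchmark-validation targets above lend themselves to an automated acceptance check. A minimal sketch follows, assuming the latency target is evaluated at the 95th percentile (nearest-rank method) and the drift criterion as a relative accuracy drop; the proposal does not state which statistics it uses, and `RunResults` and `acceptance_report` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


def percentile(samples: Sequence[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


@dataclass
class RunResults:
    tests_passed: int
    tests_total: int
    latencies_ms: Sequence[float]       # end-to-end (neural + symbolic) latencies
    recovery_times_s: Sequence[float]   # one entry per fault-injection drill
    baseline_accuracy: float
    drifted_accuracy: float


def acceptance_report(r: RunResults) -> Dict[str, bool]:
    """Check one evaluation run against the Milestone 3 targets."""
    relative_drop = (r.baseline_accuracy - r.drifted_accuracy) / r.baseline_accuracy
    return {
        "tests_pass_rate>=90%": r.tests_passed / r.tests_total >= 0.90,
        "p95_latency<200ms": percentile(r.latencies_ms, 95) < 200.0,
        "max_recovery<=5s": max(r.recovery_times_s) <= 5.0,
        "drift_accuracy_drop<5%": relative_drop < 0.05,
    }
```

Keeping each criterion as a named boolean makes it easy to publish the report alongside the performance logs and to see exactly which target a failing run missed.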

© 2025 Deep Funding