DEEP Connects Bold Ideas to Real World Change and build a better future together.

Coming Soon

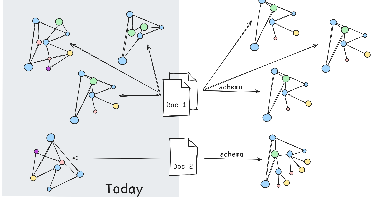

Our team is committed to solving one of the most significant challenges in knowledge representation for AGI: ensuring reproducibility in knowledge graphs generated from unstructured text data. We're proposing a comprehensive suite of open-source tools and methodologies to enforce consistency, reduce variability, and automate schema learning for LLM-generated knowledge graphs. What drives our enthusiasm is the potential to dramatically improve trust, reliability, and interoperability in neuro-symbolic AI systems - with particular attention to integration with OpenCog Hyperon, MeTTa, and the MORK backend for robust knowledge representation.

This RFP seeks the development of advanced tools and techniques for interfacing with, refining, and evaluating knowledge graphs that support reasoning in AGI systems. Projects may target any part of the graph lifecycle — from extraction to refinement to benchmarking — and should optionally support symbolic reasoning within the OpenCog Hyperon framework, including compatibility with the MeTTa language and MORK knowledge graph. Bids are expected to range from $10,000 - $200,000.

We will develop a comprehensive framework that enforces consistency in LLM-generated knowledge graphs through schema guidance. This framework will include intelligent schema extraction techniques, pattern-based extraction mechanisms, and template-based prompting strategies specifically designed to reduce hallucinations due to more structured graphs.

- A fully documented Python library implementing schema-guided extraction pipelines - A suite of optimized prompting templates demonstrating at least 40% improvement in extraction accuracy compared to unconstrained approaches - An evaluation report comparing the framework's performance across different domains, documenting consistency improvements and hallucination reduction

$25,000 USD

The framework demonstrates consistent knowledge graph generation with less than 25% structural variability across multiple runs using identical inputs. Extraction accuracy improves by at least 25% compared to baseline unconstrained LLM approaches when validated against human-annotated ground truth data.

We will create automated tools for schema learning and ontology population that transform unstructured text into consistent knowledge structures with minimal human intervention. These tools will combine traditional NLP approaches with LLM capabilities to automatically induce domain schemas and populate ontologies from text corpora. The implementation will prioritize usability for domain experts without requiring specialized knowledge engineering skills.

- A toolset for automated schema induction from domain-specific text with comprehensive API documentation - A hybrid ontology learning system integrating statistical NLP and LLM-based semantic understanding, supporting standard ontology formats

$27,500 USD

The tools successfully generate viable domain schemas from text corpora with at least 80% precision when evaluated against expert-created schemas in three distinct domains. Ontology population accuracy reaches at least 65% for entity classification and relationship extraction when compared to gold standard datasets.

We will establish a comprehensive validation framework and benchmark suite for evaluating reproducibility in knowledge graph generation. This framework will objectively measure consistency, accuracy, and structural fidelity across multiple runs of the generation process. The benchmarks will cover diverse domains and text types to ensure broad applicability and will include both structural and semantic evaluation metrics.

- A validation framework implementing multiple graph comparison techniques (structural, embedding-based, and semantic equivalence) - A benchmark dataset comprising varied domains with gold standard knowledge graphs for reproducibility evaluation - A detailed methodology document and evaluation protocol for standardized testing of knowledge graph generation reproducibility

$27,500 USD

The validation framework provides quantifiable metrics that correlate with human judgments of knowledge graph quality and consistency. The benchmark suite demonstrates discriminative power by effectively distinguishing between systems with different levels of reproducibility and consistency.

We will develop seamless integration between our reproducible knowledge graph generation tools for the MeTTa/MORK symbolic reasoning systems. The implementation will preserve semantic fidelity throughout the pipeline while ensuring compatibility with existing MeTTa/MORK workflows.

- Integration adapters for direct incorporation of extracted knowledge into reasoning workflows - A demonstration system showcasing end-to-end knowledge extraction and reasoning across at least two complex domains

$7,500 USD

Knowledge extracted via our framework can be successfully utilized in MeTTa/MORK reasoning tasks with equivalent semantic accuracy to manually encoded knowledge.

Reviews & Ratings

Please create account or login to write a review and rate.

Check back later by refreshing the page.

© 2025 Deep Funding

Join the Discussion (0)

Please create account or login to post comments.