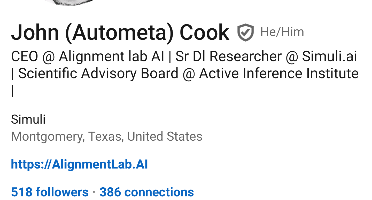

Austin Cook

Project Owner: project manager / research director. Remaining members TBD based on timing and project status.



We aim to construct a publicly accessible pool of continuously evolving reasoning traces drawn from human and frontier-model inferences. Using PyReason and custom-trained extraction networks (a semantic vector quantizer), we produce stable, internally consistent chains of thought stored in an easily scalable database of self-consistent relationships. We offer this database for free as an API to help validate frontier and personal LLMs. In turn, this lets us distill from their internal world models to contrast and debias a globally accessible graph of distilled knowledge.

This RFP seeks the development of advanced tools and techniques for interfacing with, refining, and evaluating knowledge graphs that support reasoning in AGI systems. Projects may target any part of the graph lifecycle — from extraction to refinement to benchmarking — and should optionally support symbolic reasoning within the OpenCog Hyperon framework, including compatibility with the MeTTa language and MORK knowledge graph. Bids are expected to range from $10,000 - $200,000.

Most of the work will consist of aggregating and analyzing the results of the research we have already done, determining which experiments remain to be run, and deciding on the optimal high-level structure for the system pipelines.

Compiled, detailed documentation and correlations drawn from a well-structured hierarchical disambiguation of research artifacts; a plan for the next research objectives; and a box chart detailing the higher-level code structure.

$50,000 USD

We feel strongly that the system we propose is still the optimal strategy, and that the most efficient and cost-effective methods have been implemented in a manner that makes little to no sacrifice in performance or functionality.

If any further studies are required to gain a strong understanding of the optimal next steps, this is the stage at which they should be completed.

A finalized version of the pre-deployment research artifacts and study statistics.

$50,000 USD

Noted in description.

Finalize the graph database and populate it with seed data, creating an initial skeleton of human-validated (or otherwise strongly validated) data that enables a robust network of correlations downstream, once public model reasoning traces are collected.

An API endpoint on scalable, CPU-based compute infrastructure, designed to leverage our algorithmic and implementation efficiencies to create a high-quality, scalable access point for validating, collecting, curating, graphing, and contrasting chains of LLM reasoning from the public user base.

$50,000 USD

We successfully reduce hallucinations and provide quality and utility advantages for LLM outputs, to the point that our community of developers and researchers begins to routinely use the infrastructure we build.

Deploy stable infrastructure that gives users an easy means to unify the performance and enhance the factual accuracy of any LLM while continuously contributing to the growing pool of validated causal correlations.

A stable, easily hosted, single-source-of-truth-style database of descriptive correlations about reality, built to grow more accurate and detailed over time through user and developer curation.

$50,000 USD

We have established a growing pool of performance gains such that the incentive to produce powerful AI is oriented more toward the public ecosystem than toward the monolithic "moats" of the large API providers.


© 2025 Deep Funding
